08 Nov 2017

It’s been quiet on this site for a couple of months, and that’s because as usual we’re all heads-down working. So many things to do, so many things. Sometimes however, one has to take a step back and look around, see what’s happening, see what’s around the corner, and see what others are doing.

A few years ago, all of us attended MongoDB “days” in London which were interesting, niche-ish geek-fests. That has really changed now. MongoDB is over a decade old, and at the MongoDB Europe conference today, they officially announced version 3.6, and its impressive feature-set.

Our intrepid loon Ben attended said conference, which was well-organised, well-attended, and pretty well-populated with useful content. Particular sessions of note included those on the forthcoming developer-focused product MongoDB Stitch, along with MongoDB Atlas which has been in beta for some time. These satellite offerings look great (oh my: query-able back-ups in Atlas!), but what of the core product?

Well, version 3.6 of MongoDB offers a number of intriguing features, which can be summarised as follows:

- Change streams: invoked with a simple coll.watch(), these are Observers for MongoDB. Fab!

- “Retryable” writes in the event of failures.

- Oodles of document updates including hugely increased “expressibility” in queries, aggregations, pipelining, array processing and updating. These look to be very powerful, and I think Matt will be cock-a-hoop with them.

- R driver to support advanced analytics and business intelligence.

- Support for JSON schema.

- Tunable consistency: customise your app’s balance between read-write consistency needs and overall availability.

You can read more about 3.6 and the future of MongoDB on the official web site.

11 Sep 2017

LDC Via is, in my experience, a unique company: it started out as a group of consultants gathered together to help each other and share skills and experience, and then the Via product grew out of the realisation that so many of the things that we’re asked to deliver did not exist in the market.

That still holds to this day: we don’t have a default answer to every question of “NO” like many fixed products do, but nor do we default to “YES” like the sales teams for every cloud vendor ever (and then bite you later on). What we do is take a platform that we built with care that provides 90% of what we think people will need, and then we mould the rest to match exact requirements. We don’t presume to tell you what you need.

We built LDC Via from various components, not just the current internet darlings and not necessarily the libraries that are most popular and safe at corporations. We built Via with an eye to a solid foundation that will serve both you and us for years to come.

That is what makes the difference: we are all consultants used to dealing with other people’s platforms, and trying to get around the hard “YES” and “NO” of others’ limitations. Now we don’t have to do that; we can change things if it makes sense to do so, and this is a breath of fresh air, something to be proud of as we see the platform grow.

We do not default to “Yes”, we do not default to “No”. Our default is “What do you need?”

29 Aug 2017

Often when I talk with developers who are firmly in the C# or Java camp, their main objection to JavaScript is that it’s not strongly typed. And indeed, if you’re new to the world of JavaScript, this is a big thing to get over.

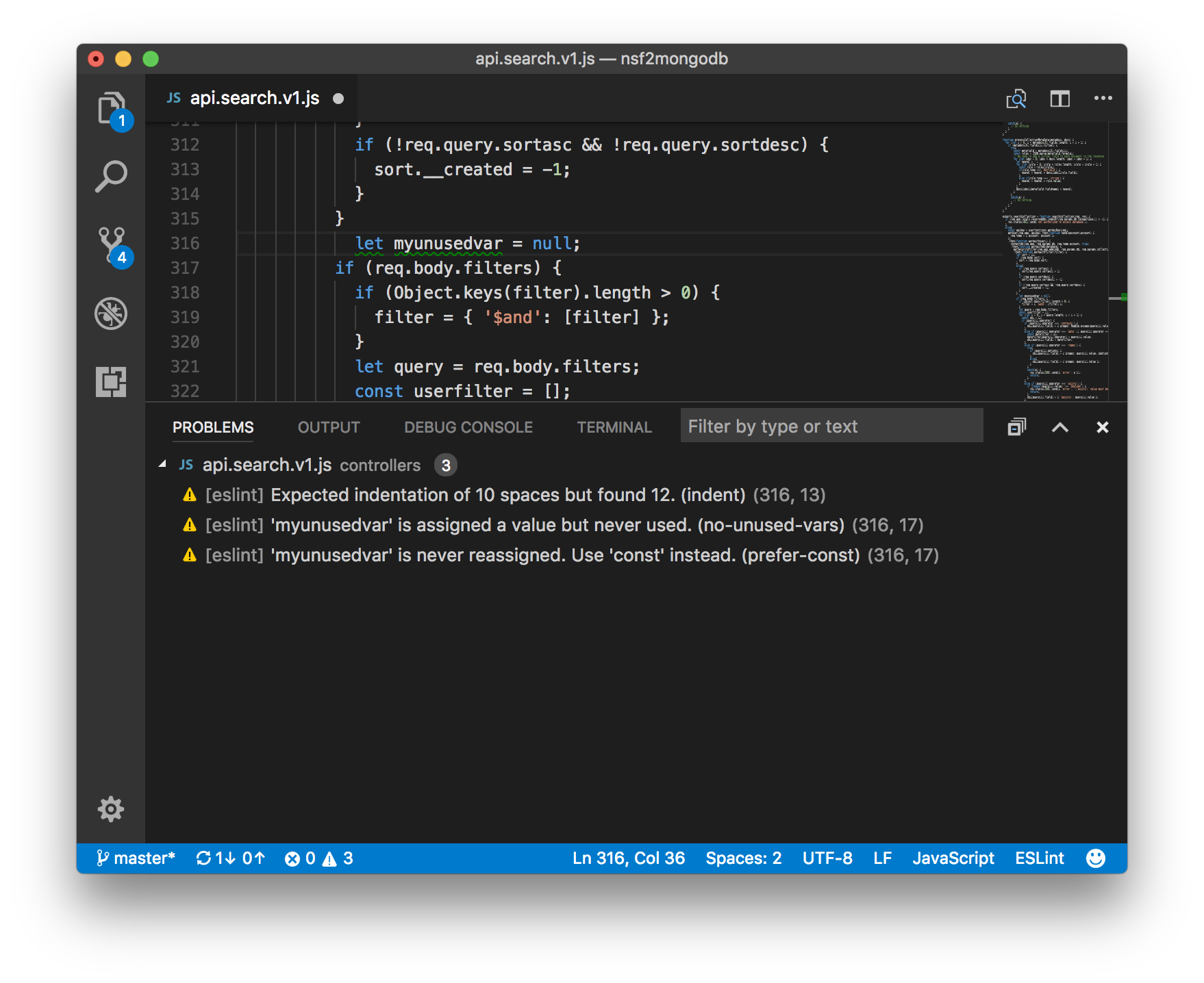

But there are strategies, best practices if you will, which can make the transition easier. One I thought worth mentioning is ESLint. This is a linting tool: it statically validates your code to make sure it adheres to a set of rules you define. These rules can be as lax or strict as you like but, applied properly, they make your code more supportable, more readable and less error-prone.

So, how to get started I hear you ask? A lot depends on your working environment. If you use a modern editor like VS Code, Atom or Sublime Text then there will be a plugin you can install to help you along. In your project, you add a .eslintrc file which defines the rules you want to apply and then you look, with horror, at the hundreds of errors that have suddenly appeared in your code.

But fear not, things aren’t as bad as they appear. Your ESLint plugin will probably have a command to automatically fix “easily fixable issues” such as using single quotes rather than double quotes for strings, or indentation of your code. Once these are all addressed you can fix the rest of the issues manually, and relatively quickly.
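As a rough illustration of the kind of rules mentioned above, here is a minimal sketch of an ESLint configuration written as a JavaScript module (ESLint accepts a `.eslintrc.js` file as well as a plain `.eslintrc`). The `quotes`, `indent` and `no-unused-vars` rules are real ESLint rules, but the particular settings are assumptions chosen for the example, not the LDC Via configuration.

```javascript
// .eslintrc.js: a hypothetical starter configuration (illustration only).
const eslintConfig = {
  // Tell ESLint which globals to expect (Node.js and modern JavaScript here).
  env: {
    node: true,
    es6: true,
  },
  rules: {
    // Report double-quoted strings as errors; this one is auto-fixable.
    quotes: ['error', 'single'],
    // Enforce two-space indentation; also auto-fixable.
    indent: ['error', 2],
    // Warn (rather than fail) on variables that are declared but never used.
    'no-unused-vars': 'warn',
  },
};

module.exports = eslintConfig;
```

With something like this in place, running `eslint --fix` over the project should rewrite quotes and indentation for you, leaving issues such as unused variables to be dealt with by hand.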

I’ve recently completed this exercise with a large code base primarily because we want to put some focus on performance: if I have code that is predictably organised, then this makes the process a lot easier.

A great side effect is that the rest of the developers on the team can pick up the code I’ve been butchering and immediately have some familiarity with the style.

The most popular ESLint configuration is published by Airbnb as an open source project. It’s a great starting point, but the beauty of these configurations is that if you don’t like a rule then you can simply turn it off. Or, on a case-by-case basis, you can turn off the rule in your code. As an example, here is the LDC Via .eslintrc file.
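To make the case-by-case option concrete, here is a small sketch of ESLint’s inline comment directives. The `logStartup` function is made up for illustration; `no-console` is a real ESLint rule, though whether it is switched on depends on the configuration you are using.

```javascript
// Hypothetical helper, used only to show ESLint's inline directives.
function logStartup(port) {
  const message = `Server listening on port ${port}`;
  // eslint-disable-next-line no-console
  console.log(message); // Allowed here, even if no-console is switched on.
  return message;
}

/* eslint-disable no-console */
// Every line between the disable and enable comments is exempt from the rule.
console.log('Debug output for this section only');
/* eslint-enable no-console */

logStartup(3000);
```

The single-line form is usually the better habit: it keeps the exception next to the code that needs it, so the rule still applies everywhere else in the file.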

Linting your code is not, in itself, going to make it great code, but it will be a big help to you and, at the end of the day, you can go home with a slightly smug feeling that you’ve gone that extra step to be a good programmer. And who doesn’t like to feel a bit smug sometimes?

23 Aug 2017

The fourth and final post in an occasional series looking at each of our preferred code editors.

A lot has been said, written, and ranted, about programming editors and IDEs over the years. Allow us to add to the noise.

It’s Julian’s turn to finish this series by talking about his choice, Sublime Text…

Before I dive into my choice, let’s have a quick recap of my colleagues’ well-considered picks.

Ben works in Atom. He likes it because it’s clean, flexible, open-source, free, built on top of Electron, and has a huge variety of packages available to allow him to tailor it to his preferences and needs.

Matt works in Visual Studio Code. Despite the “Visual Studio” name, this is a lightweight editor, which he now prefers to Atom, not least for its top-notch integration with things like Git. Like Atom, it’s built on Electron and has a good selection of plug-ins to allow him to customise it.

Mark works in WebStorm. This is the lightweight companion product to IntelliJ IDEA, which he likes for its approach to source code control, amongst other things.

As for me, I work in Sublime Text. Why? Because [a] it’s installed and [b] I’m lazy.

09 Aug 2017

A recent customer requirement was to have a process to import a list of data from CSV and incrementally update an existing table of the same data, adding/updating/deleting rows as required in order to get the two into sync. The production table has extra columns, with the CSV import being simply a list of (for argument’s sake) customer IDs and names. The technology here is .NET and SQL-Server.

In the past we might have used a process like this:

- Import the data into a temporary table

- Use a query to delete/archive any production data rows not present in the new imported list

- Use a query to update any existing production data rows that are different in the imported list

- Use a query to insert any new rows from the imported list into the production data

- Present the results (“[n1] rows added, [n2] rows updated and [n3] rows deleted”) to the UI and/or system logs.
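To make the three data operations concrete before looking at the alternative, here is a small in-memory sketch of the same synchronisation logic in JavaScript. It is not how the SQL Server process runs; the `syncCustomers` function and the record shape (`id`, `firstName`, `lastName`, `notes`) are assumptions made purely for illustration. It deletes production rows missing from the import, updates rows whose names differ, inserts new ones, and reports the three counts.

```javascript
// Synchronise a production Map (keyed by customer ID) with an imported list.
// Returns the counts that would be reported to the UI or the logs.
function syncCustomers(production, imported) {
  const importedIds = new Set(imported.map((row) => row.id));
  const counts = { added: 0, updated: 0, deleted: 0 };

  // Step 1: delete production rows that are not in the imported list.
  // (Deleting the current entry while iterating over a Map is safe.)
  for (const id of production.keys()) {
    if (!importedIds.has(id)) {
      production.delete(id);
      counts.deleted += 1;
    }
  }

  // Steps 2 and 3: update rows that differ, insert rows that are new.
  for (const row of imported) {
    const existing = production.get(row.id);
    if (!existing) {
      production.set(row.id, { firstName: row.firstName, lastName: row.lastName });
      counts.added += 1;
    } else if (existing.firstName !== row.firstName || existing.lastName !== row.lastName) {
      // Only the imported columns change; extra production columns are kept.
      existing.firstName = row.firstName;
      existing.lastName = row.lastName;
      counts.updated += 1;
    }
  }
  return counts;
}

// Example data (invented): one row changes, one disappears, one is new.
const production = new Map([
  [1, { firstName: 'Julian', lastName: 'Woodward', notes: 'extra column' }],
  [2, { firstName: 'Alex', lastName: 'Example', notes: '' }],
  [3, { firstName: 'Sam', lastName: 'Sample', notes: '' }],
]);
const imported = [
  { id: 1, firstName: 'Julian', lastName: 'Woodward' },
  { id: 2, firstName: 'Alexandra', lastName: 'Example' },
  { id: 4, firstName: 'Chris', lastName: 'Placeholder' },
];
const result = syncCustomers(production, imported);
console.log(`${result.added} rows added, ${result.updated} rows updated and ${result.deleted} rows deleted`);
```

Note that the unchanged row (ID 1) is not counted as an update, which is the behaviour the reporting step relies on.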

All well and good, and it’s a safe and established way of going about it. However… enter the SQL MERGE command, introduced in SQL-Server 2008. This allows us to perform all of the above data operations in a single SQL statement. Credit to this article (from 2014 - never tell me I’m not bang up to date!) for giving the bare bones on how to go about it, and that is definitely recommended reading for a deeper dive, as is the Microsoft documentation on MERGE. However, for a brief introduction, here is the syntax we’ve used:

CREATE PROCEDURE [dbo].[mergeCustomers]

@tblNewCustomerList CustomerType READONLY

AS

MERGE INTO Customers

USING @tblNewCustomerList NewCustomerList

ON Customers.CustomerID = NewCustomerList.CustomerID

/* For an existing row we just update the name details */

WHEN MATCHED THEN

UPDATE SET Customers.FirstName = NewCustomerList.FirstName,

Customers.LastName = NewCustomerList.LastName

/* For a new row, insert the relevant data */

WHEN NOT MATCHED BY TARGET THEN

INSERT (CustomerID, FirstName, LastName)

VALUES (NewCustomerList.CustomerID, NewCustomerList.FirstName, NewCustomerList.LastName)

/* Row is in the data but not in the upload - delete it */

WHEN NOT MATCHED BY SOURCE THEN

DELETE;

/* Now return the number of rows affected */

SELECT @@ROWCOUNT

RETURN

The first question this raises is: what’s that @tblNewCustomerList parameter? The answer is: it’s a user-defined table TYPE, which allows us to pass an entire “table” of data into the stored procedure. In .NET/C# terms this means we create an in-memory DataTable object, populate it with the contents of the CSV file, and then pass it to the stored procedure. In our case, data lengths and other validation is done in code, in order to ensure (as far as possible) that the data is compliant with any NULL rules or SQL field lengths before being passed, so that SQL won’t reject the entire update.

The C# code is of this form:

DataTable dt = new DataTable();

DataRow row;

int rowsaffected = 0;

dt.Columns.Add("CustomerID", typeof(int));

dt.Columns.Add("FirstName", typeof(string));

dt.Columns.Add("LastName", typeof(string));

// Then repeated chunks of this sort of thing to add all our data to the datatable

row = dt.NewRow();

row["CustomerID"] = 1;

row["FirstName"] = "Julian";

row["LastName"] = "Woodward";

dt.Rows.Add(row);

// Then when we’ve finished constructing the data

string connString = [insert your SQL connection string here];

using (SqlConnection conn = new SqlConnection(connString))

{

SqlParameter param = new SqlParameter("@tblNewCustomerList", SqlDbType.Structured)

{

TypeName = "dbo.CustomerType",

Value = dt

};

using (SqlCommand cmd = new SqlCommand("dbo.mergeCustomers", conn))

{

cmd.CommandType = CommandType.StoredProcedure;

cmd.Parameters.Add(param);

conn.Open();

rowsaffected = (int)cmd.ExecuteScalar();

}

}

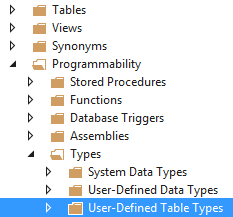

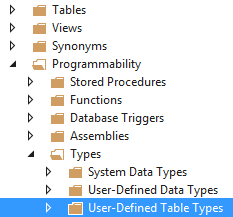

The last piece of the jigsaw, then, is this TYPE thing: how do we create that? The answer: go into (I’m using Visual Studio 2015) the “SQL Server Object Explorer” tool (via the [View] menu if it’s not already visible), and open the twisties in the order

Databases -> [your database] -> Programmability -> Types -> User-Defined Table Types

Right-click to add a new one, and then define it pretty much as you would a new table. For instance:

CREATE TYPE [dbo].[CustomerType] AS TABLE

(

[CustomerID] INT NOT NULL,

[FirstName] NVARCHAR (50) NULL,

[LastName] NVARCHAR (50) NULL

)

That’s all folks!

Or… is it?

Here’s the thing. That MERGE command is enormously powerful, but the information you get back from it is extremely limited: @@ROWCOUNT will tell you the number of rows it touched. But remember our original pattern, which allowed us to feed back to the user the individual counts of rows added/updated/deleted? Let’s say we need to find a way to do that.

The first thing to fix is that at the moment we are touching ALL existing rows with the FirstName and LastName, even if they are in fact the same, so let’s change that so that we’re only touching the rows we need to touch. That’s actually a simple change to the piece of syntax that deals with the case when we have a match, replacing

WHEN MATCHED THEN

with

WHEN MATCHED

AND (Customers.FirstName<>NewCustomerList.FirstName

OR Customers.LastName<>NewCustomerList.LastName)

THEN

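This now tells us the real number of necessary updates, in @@ROWCOUNT, but still doesn’t give us the separate counts of updates, insertions and deletions that we desire. (One caveat: a <> comparison is never true when either side is NULL, so if the name columns can hold NULLs the condition needs to allow for that.)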

One approach might be to have a separate stored procedure which compared the new and existing data and built some reports on what the MERGE command will do when it’s run. But that’s potentially error-prone, particularly if there’s a chance the data will change in between the two SQL statements being executed.

A better approach involves changing the design of the table slightly to add an extra two columns:

MergeBatchID - char(36) - NULL

MergeAction - char(1) - NULL

Within the SQL procedure we can generate a batch ID as a GUID, thus:

DECLARE @batchID AS char(36)

SET @batchID = NEWID()

and then the updates and inserts can set it appropriately:

UPDATE SET MergeBatchID = @batchID,

MergeAction = 'U',

Customers.FirstName = NewCustomerList.FirstName,

Customers.LastName = NewCustomerList.LastName

and

INSERT (MergeBatchID, MergeAction, CustomerID, FirstName, LastName)

VALUES (@batchID, 'C', NewCustomerList.CustomerID, NewCustomerList.FirstName, NewCustomerList.LastName)

Using this we can then have some further SQL within the same stored procedure to build the detail we need. Possibly this would be more efficient done as a GROUP BY query, but for our purposes we’re happy with a couple of quick-running COUNT(*) type queries. Of course, we can’t get the deleted number from the data, because the relevant data has been, um, deleted. But we can derive it from the total returned by @@ROWCOUNT and the Updates/Creates numbers we’ve already extracted:

DECLARE @Total as int

DECLARE @Creates as int, @Updates as int

SELECT @Total = @@ROWCOUNT

SELECT @Creates = Count(*) FROM Customers WHERE MergeBatchID=@batchID AND MergeAction='C'

SELECT @Updates = Count(*) FROM Customers WHERE MergeBatchID=@batchID AND MergeAction='U'

SELECT @Creates as CreatedCount, @Updates as UpdatedCount,

@Total-(@Creates+@Updates) as DeletedCount, @Total as TotalCount

Naturally, the C# code will also need to be modified to read the results. But, other than that, there we have it: the MERGE syntax, with full user-friendly stats.

In our production example, which isn’t so different from this, an incremental update of about 10,000 rows of imported CSV takes 1-2 seconds.